
In December, just before Instagram head Adam Mosseri testified before the US Senate about the effects of the app on teen users, the company announced plans to roll out a series of safety features and parental controls. This morning, Instagram is updating a critical set of these features, which will default users under 16 to the app’s most restrictive content setting. It will also prompt existing teen users to do the same, and it is introducing a new “Settings Check” feature that guides teens through updating their safety and privacy settings.

The changes are rolling out to global users across platforms amid increasing regulatory pressure on social media apps over safety issues affecting minors.

During last year’s Senate hearing, Mosseri defended Instagram’s safety record for teens in light of concerns raised by Facebook whistleblower Frances Haugen, whose leaked documents painted a picture of a company that was aware of the negative mental health effects of its app on its young users. Although the company insisted at the time that it took adequate precautions in this area, in 2021 Instagram began to change how teens use its app and what they can see and do.

In March of this year, for example, Instagram introduced parental controls and safety features to protect teens from interactions with unknown adult users. In June, it updated its Sensitive Content Control, launched the year before, to cover all the surfaces in the app where it makes recommendations. This allowed users to manage sensitive content across areas such as Search, Reels, Accounts You Might Follow, Hashtag Pages and In-Feed Recommendations.

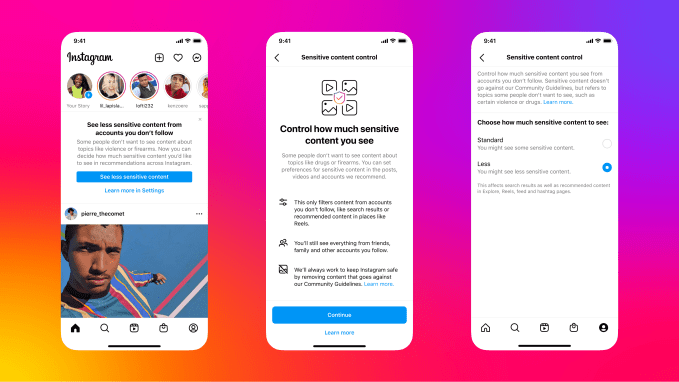

This Sensitive Content Control feature is getting another update today.

The June release let users adjust their settings for “sensitive content,” that is, content that may depict graphic violence, be sexualized in nature, or concern restricted goods, among other things. At the time, there were three options for restricting this content: “More,” “Less” or “Standard.”

Previously, all users under 18 could choose only between the “Standard” and “Less” categories. They could not switch to “More” until they were adults.

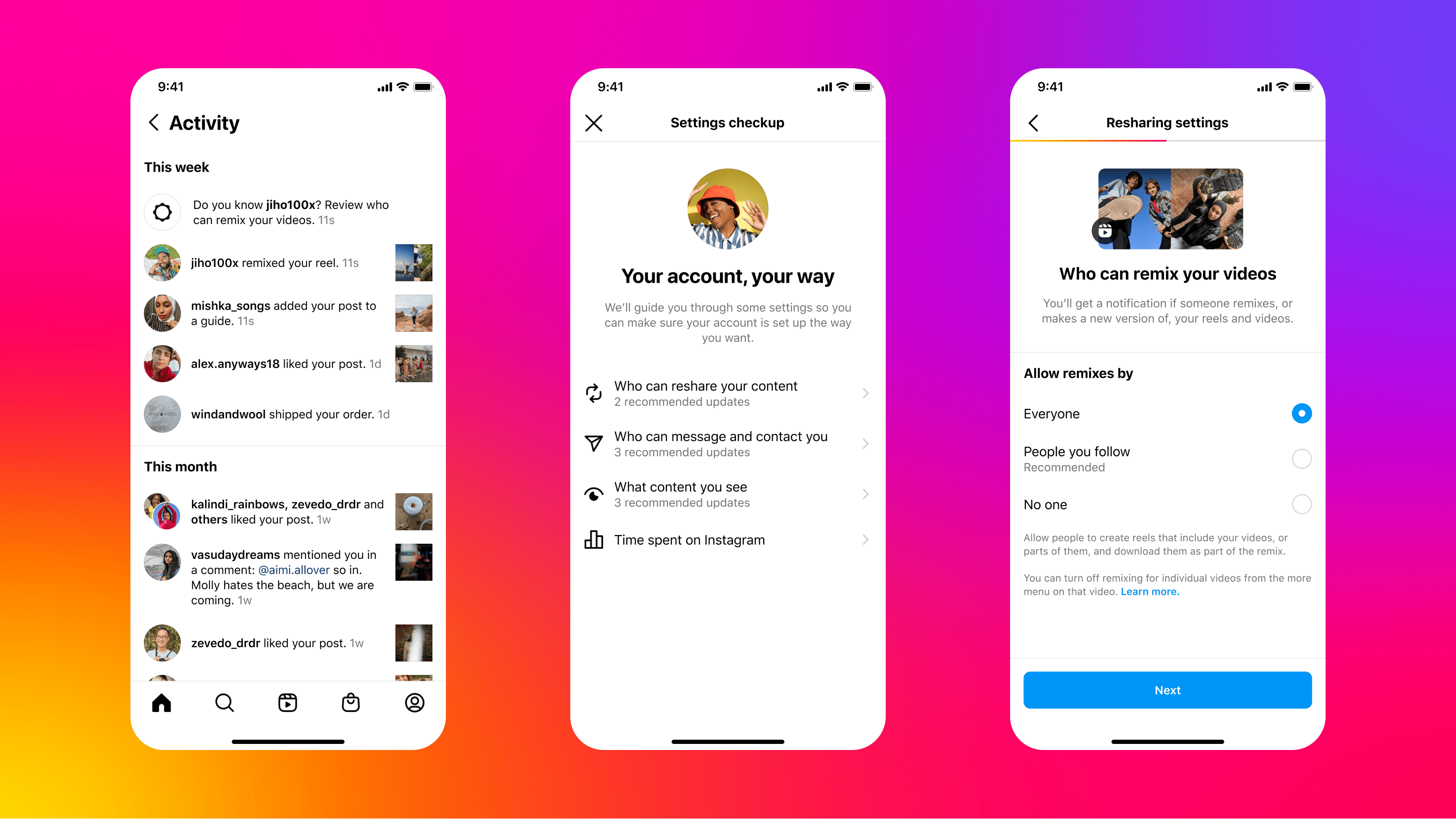

Image Credit: Instagram

Now, with today’s update, teens under 16 will be defaulted to the “Less” setting if they’re new to Instagram. (They can still change this to “Standard” later if they choose.)

Existing teen users will see a prompt that encourages, but does not require, them to choose the “Less” setting as well.

As before, this applies to content and accounts surfaced across Search, Explore, Hashtag Pages, Reels, Feed Recommendations and Suggested Accounts, Instagram says.

“The whole effort is for teens to have a safer search experience, to not see so much sensitive content and to automatically see less than any adult would on the platform,” said Jeanne Moran, Instagram Policy Communications Manager, Youth Safety and Well-Being, in a conversation with TechCrunch. “…We encourage teens to choose ‘Less,’ but if they think they can handle ‘Standard,’ they can.”

Of course, how effective this change will be depends on whether teens actually follow the prompt’s suggestion, and on whether they entered their correct age in the app to begin with. Many young users lie about their birthdate when they sign up for apps to avoid being defaulted to more restrictive experiences. Instagram has been trying to address this problem with AI and other technologies. These include prompts that now require users to provide their birthday if they have not already done so, AI that scans for possibly fake ages (for example, by finding birthday posts where the age doesn’t match the birthdate on file) and, more recently, tests of new tools such as video selfies.

The company hasn’t said how many accounts it has caught and adjusted using these technologies, however.

Separately from the news about its Sensitive Content Control changes, the company is rolling out a new “Settings Check” designed to encourage all users under 18 on the app to update their safety and privacy settings.

This prompt focuses on pointing teens to tools for managing things like who can reshare their content, who can message and contact them, and how much time they spend on Instagram, as well as the Sensitive Content Control settings.

The changes are part of a broader reckoning in consumer technology over how apps can better serve younger users. The EU, in particular, has had its eye on social apps like Instagram through conditions set out under its General Data Protection Regulation (GDPR) and the Age Appropriate Design Code. On the matter of teen usage of its app, Instagram is now awaiting a decision on a complaint about its handling of children’s data in the EU. Elsewhere, including in the US, lawmakers are weighing options that would further regulate social media and consumer technology in a similar fashion, including a revamp of COPPA and the implementation of new laws.

Responding to the latest changes, Common Sense Media founder and CEO Jim Steyer said Instagram could do more to make its app safer.

“The safety measures for minors implemented by Instagram today are a step in the right direction that, after a long delay, begins to address the harms to young people from algorithmic amplification,” Steyer said in a prepared statement. “Defaulting young users to a safer version of the platform is an important step that can reduce the amount of harmful content that young people see on their feeds. However, the effort to create a safe platform for young users is more complicated than this one step, and more work needs to be done.”

He said that Instagram should block harmful and inappropriate content from reaching young people, and should route users to this safer version of the platform whenever it suspects a user is under 16, regardless of what the user entered at sign-up. He also pushed Instagram to add damaging behaviors to its list of “sensitive content,” including content that promotes violence and unhealthy eating.

Instagram says the Sensitive Content Control changes are rolling out now. The Settings Check, meanwhile, is just entering testing.
